Recognizing facial expressions and emotions is a fundamental skill that humans learn early in social interaction. A person can look at a face and quickly recognize common emotions: anger, happiness, surprise, disgust, sadness, and fear. Conveying this skill to a machine is a complex task. Over decades of engineering, researchers have tried to write computer programs that accurately identify a feature, only to start over each time they needed to identify a feature that was slightly different. What if, instead of programming the machine, you taught it to recognize emotions accurately?

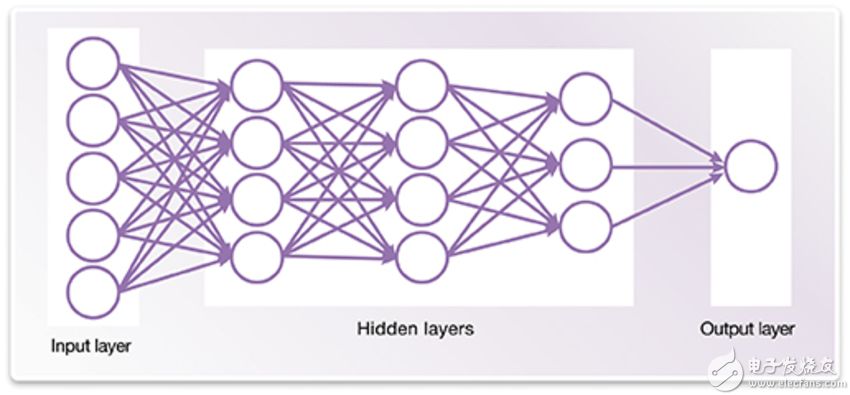

Deep learning techniques have shown tremendous advantages in reducing the error rate of computer vision recognition and classification. Implementing a deep neural network (see Figure 1) in an embedded system helps the machine interpret facial expressions visually and approach human-like accuracy.

Figure 1. A simple example of a deep neural network

A neural network learns to recognize patterns through training. If it has an input layer, an output layer, and at least one hidden layer in between, it is considered "deep". The value of each node is calculated from the weighted values of multiple nodes in the previous layer. These weights can be adjusted so that the network performs a specific image recognition task. Adjusting them is called training the neural network.
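As a minimal sketch of how a node's value is computed from the weighted values of the previous layer, the following pure-Python example runs one forward pass through a tiny network. The layer sizes, weight values, and the choice of a sigmoid activation are assumptions made for illustration; they are not taken from the article.

```python
import math

def sigmoid(x):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Compute every node of one layer from the previous layer's values.

    weights[k] holds the weights feeding node k, one per input value.
    """
    outputs = []
    for node_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs

# Hypothetical network: 3 input pixels -> 2 hidden nodes -> 1 output node.
pixels = [0.2, 0.7, 0.1]
hidden_weights = [[0.5, -0.3, 0.8], [-0.6, 0.9, 0.1]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.7]]
output_biases = [0.05]

hidden = layer_forward(pixels, hidden_weights, hidden_biases)
score = layer_forward(hidden, output_weights, output_biases)[0]
print("hidden activations:", hidden)
print("output score:", score)
```

Training amounts to changing the numbers in `hidden_weights` and `output_weights` until `score` matches the desired label for many example images.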

For example, to train a deep neural network to recognize happy faces, we present it with a picture of a happy face as raw data (image pixels) on the input layer. Because the picture is labeled as happy, the network looks for patterns in it and adjusts the node weights to minimize its error on the happy category. Every new annotated image of a happy expression helps refine the network weights. After training on enough input data, the network can take in unlabeled pictures and accurately identify the patterns that correspond to a happy expression.
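The weight adjustment described above can be sketched with a single sigmoid node trained by gradient descent. This is a toy illustration under stated assumptions: the four-pixel "images", their labels, the learning rate, and the epoch count are all hypothetical, and a real network would have many layers and far more data.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, pixels):
    """Return the node's estimate that the picture is 'happy' (0 to 1)."""
    return sigmoid(sum(w * p for w, p in zip(weights, pixels)) + bias)

def train(samples, epochs=2000, learning_rate=0.5):
    """Nudge the weights after each labeled picture to reduce the error."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in samples:
            # Difference between the prediction and the annotation; this is
            # the gradient of the cross-entropy loss for a sigmoid node.
            error = predict(weights, bias, pixels) - label
            weights = [w - learning_rate * error * p
                       for w, p in zip(weights, pixels)]
            bias -= learning_rate * error
    return weights, bias

# Hypothetical annotated data: label 1 = happy, 0 = not happy.
samples = [
    ([0.9, 0.8, 0.1, 0.2], 1),
    ([0.8, 0.9, 0.2, 0.1], 1),
    ([0.1, 0.2, 0.9, 0.8], 0),
    ([0.2, 0.1, 0.8, 0.9], 0),
]
weights, bias = train(samples)

# Pictures the node has never seen, and that carry no label.
unseen_happy = [0.85, 0.75, 0.15, 0.25]
unseen_other = [0.15, 0.25, 0.85, 0.75]
print("unseen happy picture:", predict(weights, bias, unseen_happy))
print("unseen other picture:", predict(weights, bias, unseen_other))
```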

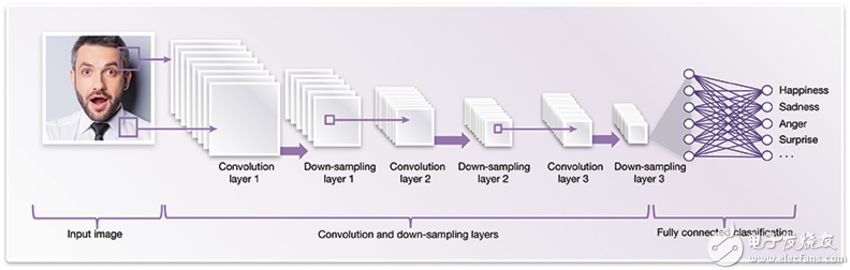

Deep neural networks require a lot of computing power to calculate the weighted values of all these interconnected nodes. Sufficient data memory and efficient data movement are also important. The convolutional neural network (CNN), shown in Figure 2, is currently the most efficient way to implement deep neural networks for vision. CNNs are more efficient because they reuse the same weights across the image, exploiting the two-dimensional structure of the input data to reduce redundant computation.

Figure 2. Example of a convolutional neural network architecture (or schematic) for facial analysis
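To illustrate the weight reuse that makes a CNN efficient, here is a small sketch of a 2D convolution in pure Python. The 5x5 image and the 3x3 vertical-edge kernel are made-up values; the point is that the same nine weights are applied at every image position, whereas a fully connected layer producing the same number of outputs would need a separate weight for every input-output pair.

```python
def convolve2d(image, kernel):
    """Slide one kernel over every valid position of a 2D image."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            acc = 0.0
            # The same kernel weights are reused at every (row, col).
            for i in range(kernel_h):
                for j in range(kernel_w):
                    acc += image[row + i][col + j] * kernel[i][j]
            out_row.append(acc)
        output.append(out_row)
    return output

# Hypothetical 5x5 image with a dark-to-bright vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical 3x3 kernel that responds to vertical edges.
kernel = [[-1, 0, 1] for _ in range(3)]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)

# Weight count: shared kernel versus a fully connected layer that maps
# all 25 input pixels to the same 9 outputs.
conv_weights = len(kernel) * len(kernel[0])
dense_weights = (5 * 5) * (len(feature_map) * len(feature_map[0]))
print("convolution weights:", conv_weights)
print("fully connected weights:", dense_weights)
```

The feature map is strongest where the kernel overlaps the edge and zero where the image is uniform, and the convolution needs 9 weights where the fully connected alternative needs 225.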

Implementing a CNN for facial analysis involves two distinct and independent phases: the training phase and the deployment phase.

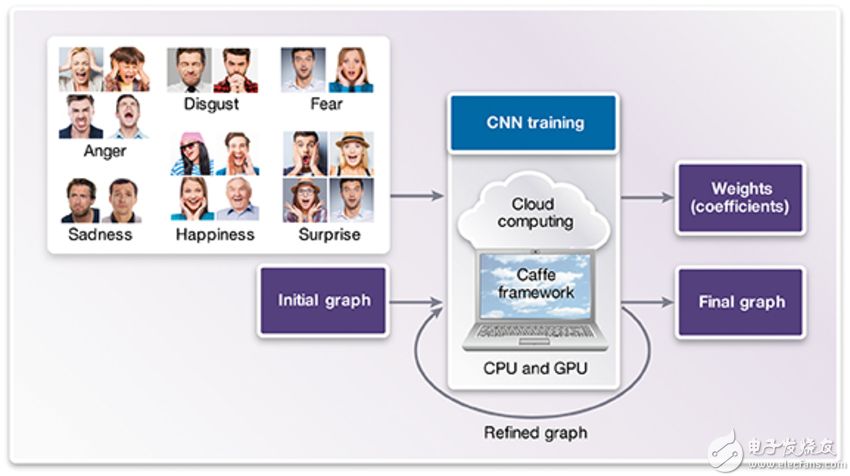

The training phase (shown in Figure 3) requires a deep learning framework, such as Caffe or TensorFlow, which uses CPUs and GPUs for the training calculations, as well as knowledge of how to use the framework. These frameworks typically provide example CNN graphs that can be used as a starting point. The framework is then used to fine-tune the graph: to achieve the best possible accuracy, you can add, remove, or modify layers.

Figure 3. CNN training phase

One of the biggest challenges in the training phase is finding the right dataset to train the network. The accuracy of a deep network depends heavily on the distribution and quality of the training data. Options to consider for facial analysis include the emotion-annotated dataset from the Facial Expression Recognition Challenge (FERC) and the multi-annotated private dataset from VicarVision (VV).

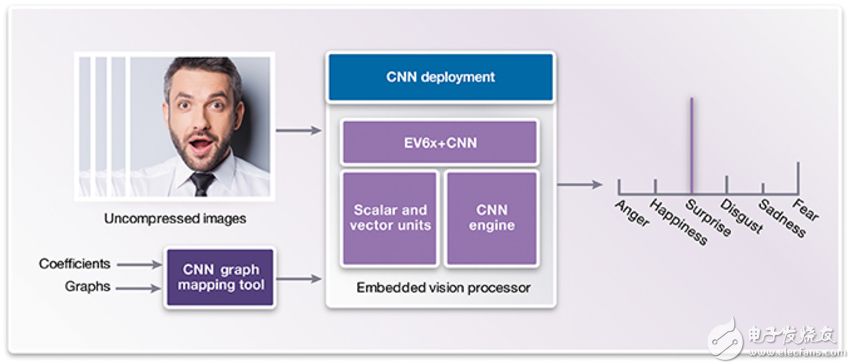

The deployment phase (shown in Figure 4) is for real-time embedded designs that can be implemented on embedded vision processors, such as the Synopsys DesignWare® EV6x embedded vision processor with a programmable CNN engine. The embedded vision processor is the best choice for balancing performance with small area and lower power consumption.

Figure 4. CNN deployment phase

The scalar and vector units are programmed in C and OpenCL C (for vectorization), while the CNN engine does not have to be programmed manually. The final graph and weights (coefficients) from the training phase are passed to the CNN mapping tool, which configures the embedded vision processor's CNN engine so that it is ready to perform facial analysis.

Images or video frames captured by the camera and image sensor are sent to the embedded vision processor. Scenes with significant variation in lighting conditions or facial pose are harder for a CNN to handle, so pre-processing the images can make the faces more uniform. With the heterogeneous architecture of an advanced embedded vision processor, the CNN engine can classify one image while the vector unit pre-processes the next one (lighting correction, image scaling, in-plane rotation, and so on) and the scalar unit handles decision making (i.e., what to do with the CNN detection results).
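The kind of pre-processing mentioned above can be sketched in a few lines of Python. The two steps shown, min-max lighting normalization and nearest-neighbor scaling, are common choices assumed here for illustration; the article does not say which algorithms run on the vector unit, and the function names and frame values are hypothetical.

```python
def normalize_lighting(image):
    """Stretch pixel values to the 0.0-1.0 range (min-max normalization)."""
    flat = [pixel for row in image for pixel in row]
    low, high = min(flat), max(flat)
    if high == low:
        # A completely flat image carries no contrast to stretch.
        return [[0.0 for _ in row] for row in image]
    return [[(pixel - low) / (high - low) for pixel in row] for row in image]

def resize_nearest(image, out_h, out_w):
    """Scale an image to out_h x out_w by nearest-neighbor sampling."""
    in_h, in_w = len(image), len(image[0])
    return [[image[r * in_h // out_h][c * in_w // out_w]
             for c in range(out_w)]
            for r in range(out_h)]

def preprocess(frame, size=2):
    """Make a frame more uniform before it is handed to the classifier."""
    return resize_nearest(normalize_lighting(frame), size, size)

# Hypothetical 4x4 grayscale frames of the same scene, one captured in
# dim light and one in bright light (every pixel 100 levels higher).
dim_frame = [
    [50, 60, 70, 80],
    [60, 70, 80, 90],
    [70, 80, 90, 100],
    [80, 90, 100, 110],
]
bright_frame = [[pixel + 100 for pixel in row] for row in dim_frame]

print(preprocess(dim_frame))
print(preprocess(bright_frame))
```

After pre-processing, the dim and bright frames are identical, so the classifier sees the same input regardless of the overall brightness offset.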

The image resolution, frame rate, number of layers, and expected accuracy together determine the number of parallel multiply-accumulate (MAC) operations and the performance required. The CNN engine of Synopsys' EV6x embedded vision processor operates at 800 MHz in a 28 nm process technology while delivering up to 880 MACs per cycle.
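A rough way to relate resolution, frame rate, and layer count to MAC requirements is to count the multiply-accumulates of each convolution layer and compare the total with the processor's peak throughput. The sketch below assumes that the 880 figure means MACs per clock cycle at 800 MHz, and the three-layer network and the 30 frames per second target are hypothetical values chosen for illustration, not a description of any real design.

```python
def conv_layer_macs(out_h, out_w, in_channels, out_channels, kernel_size):
    """MACs for one convolution layer: one kernel_size x kernel_size x
    in_channels dot product per output pixel per output channel."""
    return (out_h * out_w * out_channels
            * kernel_size * kernel_size * in_channels)

# Hypothetical network: (out_h, out_w, in_channels, out_channels, kernel_size)
layers = [
    (48, 48, 1, 32, 3),
    (24, 24, 32, 64, 3),
    (12, 12, 64, 128, 3),
]
macs_per_frame = sum(conv_layer_macs(*layer) for layer in layers)

frames_per_second = 30               # assumed target frame rate
required_macs_per_second = macs_per_frame * frames_per_second

macs_per_cycle = 880                 # assumed to be a per-cycle figure
clock_hz = 800_000_000               # 800 MHz
peak_macs_per_second = macs_per_cycle * clock_hz

print("MACs per frame:", macs_per_frame)
print("required MACs per second:", required_macs_per_second)
print("peak MACs per second:", peak_macs_per_second)
print("fraction of peak used:",
      required_macs_per_second / peak_macs_per_second)
```

Peak figures are upper bounds; real utilization depends on memory bandwidth and on how well each layer maps onto the engine, so a budget like this is only a first-order check.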

Once a CNN has been configured and trained to detect emotions, it can be reconfigured more easily to handle other facial analysis tasks, such as determining age range, identifying gender or ethnicity, and recognizing hairstyles or whether a person is wearing glasses.

Summary

CNNs running on embedded vision processors open up new areas of visual processing. Electronic devices that can analyze emotions will soon be common around us, such as toys that detect happiness and electronic teachers that gauge students' understanding by recognizing their facial expressions. The combination of deep learning, embedded vision processing, and high-performance CNNs will soon turn this vision into reality.
