Introduction

With the rapid development of China's road transportation industry, the number of cars has exceeded 150 million and continues to grow. This growth has strongly supported China's transportation industry, but it has also brought serious traffic safety hazards. Road traffic accidents now rank first among all types of accidents and are a major obstacle to establishing a safe and sustainable transportation system. It is therefore imperative to establish a road traffic safety assurance system through technical means in order to reduce traffic accidents. Based on an analysis of the driver's visual function during driving, this paper introduces and analyzes the research status of vehicle safety technologies based on on-board machine vision and looks ahead to development trends in this field.

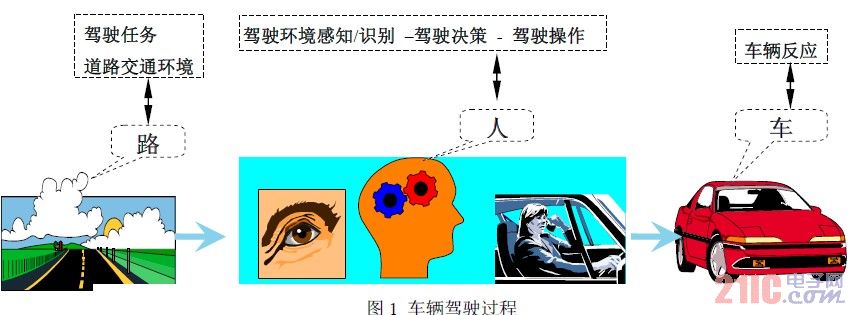

1 Driving process description

According to the classic stimulus-organism-response model of human behavior, driving a car can be divided into three stages, as shown in Figure 1: the perception stage, the judgment and decision stage, and the operation stage. In the perception stage, the driver uses the sensory organs to acquire and initially understand real-time traffic information and to perceive the operating environment of the car. In the judgment and decision stage, the driver draws on driving experience and skills, analyzes the situation through the central nervous system, and decides on measures conducive to safe driving. In the operation stage, the driver carries out the decision through the motor organs. While the car is moving, driving behavior is a continuous, repeating information processing loop made up of these three stages: perception informs judgment and decision, which in turn determines operation. The perception stage is the basis for safe driving. If the driver does not perceive accurate and timely environmental information, mistakes in judgment and action are very likely, resulting in traffic accidents. Information acquisition in the perception stage relies primarily on vision, touch, smell and hearing, with more than 80% of the information obtained through the driver's vision. Driving vision directly affects the breadth, depth and accuracy of the perceived information, so the driver's visual characteristics are directly related to driving safety. Automotive safety assisted driving technology based on on-board machine vision aims to improve the driver's visual performance, improve the relationship between vision and driving behavior, and assist driving so as to reduce improper operation caused by visual limitations, thereby making the human-vehicle-road system more stable and reliable and improving the active safety of the vehicle.

2 Machine vision assisted driving technology for vehicle external information

The ability of the human eye is limited. Machine vision assisted driving technology that obtains external information through on-board equipment can improve visual adaptability, increase visual range, and deepen visual understanding. For external vehicle information, research on machine vision assisted driving technology covers two areas: visual enhancement and expansion of the driving environment, and machine vision recognition of the driving environment.

2.1 Visual enhancement and expansion of the driving environment and display

2.1.1 Visual enhancement

The visual enhancement system is one of the advanced vehicle control technologies in the intelligent transportation system. It supports the driver's vision in different weather (fog, rain, sand) and at different times of day. There are generally two enhancement methods: (1) monitoring the road traffic environment through a sensor system, processing the information to obtain real-time road traffic conditions, and providing the relevant visual information to the driver, thereby achieving intelligent visual enhancement; (2) improving the driver's visual environment, mainly by removing rain and frost from the windshield and making the headlights more intelligent, in order to enhance the driver's vision under adverse conditions such as low visibility and low illumination.

Taking the visual characteristics of the human eye into account, sensors such as CCD cameras, infrared sensors, vehicle speed sensors, GPS and millimeter wave radar are used to acquire road information. The information is processed and fused to extract useful traffic environment information under low visibility and low illumination, noise is removed, and the result is presented to the driver as an image. Low-visibility visual enhancement systems were first used for aircraft landing. The concept of the Vision System was proposed between the late 1980s and the early 1990s. Depending on the means and the integration method used, vision systems are divided into:

(1) Sensor Vision System (SensorVS)

A forward-looking sensor detects the view outside the cockpit in real time. The view can be generated by a single sensor or synthesized from multiple sensors, and it is close to the natural scene of the real world.

(2) Synthetic Vision System (SVS)

The virtual view constructed from the terrain model stored in a terrain database is called a synthetic view (SV).

(3) Enhanced Vision System (EVS)

The superposition of the sensor view and the synthetic view is called enhanced vision. It contains both natural scenes detected in real time and virtual scenes generated from the database. The two are matched and overlaid, so that the sharp contours of the virtual scene enhance the blurred real scene. An EVS therefore includes both the SensorVS and the SVS. It can enhance the visibility of the view outside the window under severe weather conditions [1].

2.1.2 Visual expansion

Visual expansion compensates for the limits of the driver's vision by using vision sensors and similar devices to extend the driver's field of view. An example is Ford's CamCar, which uses multiple miniature cameras and three switchable video displays to give the driver front and rear lines of sight. This makes parking easier and improves driving safety in crowded traffic. The technical features of CamCar include:

(1) Forward camera system.

Forward-facing cameras are mounted on both sides of the car to provide a view of obstacles. The coverage angle is up to 22°, which corresponds to a field of view about 116 m wide at a distance of 300 m.
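The relation between the coverage angle and the width of the field of view can be checked with basic trigonometry. The following is a minimal sketch, assuming a symmetric coverage angle centered on the camera axis and a flat scene; the function name `field_of_view_width` is chosen here for illustration and does not come from the CamCar documentation.

```python
import math


def field_of_view_width(coverage_angle_deg: float, distance_m: float) -> float:
    """Width of the visible strip at a given distance.

    Assumes the coverage angle is split evenly on both sides of the
    camera axis, so width = 2 * distance * tan(angle / 2).
    """
    half_angle_rad = math.radians(coverage_angle_deg / 2.0)
    return 2.0 * distance_m * math.tan(half_angle_rad)


# Figures quoted in the text: 22 degrees of coverage, viewed at 300 m.
width_m = field_of_view_width(22.0, 300.0)
print(f"{width_m:.1f} m")  # prints "116.6 m"
```

Under these assumptions the result agrees with the 116 m figure quoted for the forward camera system.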

(2) Enhanced side view.

The second part of the CamCar camera system consists of two rear-facing cameras that provide uninterrupted rearward visibility of the adjacent lanes. Their coverage is much wider than that of traditional rearview mirrors, so the driver can monitor vehicles approaching from behind before changing lanes. This rearward view has virtually no blind spots. The rear-facing cameras are mounted on the sides of the car, similar to side-view mirrors. The lenses provide a wider field of view, with a coverage angle of 49° on each side.

(3) Panoramic view of the rear of the car.

The CamCar's rear view is enhanced by four precisely arranged miniature cameras mounted at the back of the car. The four cameras fan out and capture four separate images that cover a wide area of road behind the car. These images are sent to a computer program that compares and superimposes them, and a seamless panoramic view is then synthesized with a total coverage angle of up to 160°.

2.1.3 Display technology

Road environment image displays and road environment alarm devices are the interfaces between the driver and the vehicle, and they should be designed according to good human factors principles. At present there are two main types of information display device: the head-down display and the head-up display. The head-down display is mainly used in car navigation and multimedia systems, and its design and application are relatively mature. For example, Ford's CamCar has three video displays on the dashboard: a central display and two side-mounted displays. The displayed image can be changed according to the situation to give the driver the most important information. The head-up display is mostly used in safety assisted driving display systems. It lets the driver quickly scan road environment and warning information while driving at high speed, but its design is still being developed and improved.

2.2 Machine vision recognition of the driving environment

Machine vision recognition of the driving environment is a higher level of vehicle safety assisted driving technology. Image sensors are used to identify road environment parameters and to assess driving safety. This includes lane detection, vehicle detection, pedestrian detection, traffic sign detection and the like.

2.2.1 Lane detection

At present, lane detection is mostly realized by detecting road markings and road edges. Typical driving safety assistance systems based on lane detection include the lane departure warning system (Lane Departure Warning System) and the turning deceleration adjustment system.

The lane departure warning system consists of a camera, a speed sensor, an information processing system, a steering wheel regulator, and an alarm system. Once the vehicle tends to deviate from its lane, the driver is alerted by an indicator light and a buzzer. When the driver's turn signal operation shows that a lane change is intentional, the alarm is temporarily suppressed. The system can be switched off, but it automatically resumes working the next time the vehicle is started. Lane departure warning systems mostly use monocular cameras to capture images of road markings. To make road marking detection more reliable, the ITS Center of the Japan Automobile Research Institute is exploring the use of binocular CCD cameras and a real-time differential GPS system to detect how far a moving vehicle deviates from the road markings.
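The warning logic described above can be sketched as a small decision function. This is only an illustration under stated assumptions: the `LaneState` fields, the default lane and vehicle widths, and the one-second look-ahead used to model a "tendency to deviate" are all hypothetical and are not taken from any specific production system.

```python
from dataclasses import dataclass


@dataclass
class LaneState:
    lateral_offset_m: float   # signed offset of the vehicle center from the lane center
    lateral_speed_mps: float  # signed drift speed, same sign convention as the offset
    turn_signal_on: bool      # True when the driver has signaled a lane change


def should_warn(state: LaneState,
                lane_width_m: float = 3.5,      # assumed lane width
                vehicle_width_m: float = 1.8,   # assumed vehicle width
                lookahead_s: float = 1.0) -> bool:  # assumed prediction horizon
    """Return True when the indicator light and buzzer should be triggered."""
    # An intentional lane change (turn signal on) temporarily suppresses the alarm.
    if state.turn_signal_on:
        return False
    # Free space between the vehicle side and the lane marking when centered.
    margin_m = (lane_width_m - vehicle_width_m) / 2.0
    # Predict the offset a short time ahead to capture the tendency to deviate.
    predicted_offset_m = state.lateral_offset_m + state.lateral_speed_mps * lookahead_s
    return abs(predicted_offset_m) > margin_m


print(should_warn(LaneState(0.6, 0.4, turn_signal_on=False)))
print(should_warn(LaneState(0.6, 0.4, turn_signal_on=True)))
```

Predicting the offset ahead of time, instead of waiting until a wheel crosses the marking, is one simple way to warn on a tendency to depart; real systems derive the offset and drift speed from the detected road markings.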

2.2.2 Vehicle detection

Vehicle detection uses various sensors to obtain information about vehicles ahead, to the side, and behind, including their speed and position and the size of obstacles. The related driving safety support systems are the adaptive cruise control system (ACC, Adaptive Cruise Control System), the forward collision warning system (FCW, Forward Collision Warning), the lateral collision warning system (LDW, Lateral Drift Warning), and the parking assistance system (Parking Assistance System). In ACC and FCW, a 77 GHz microwave radar or a camera collects information about the road ahead. This is combined with road geometry and electronic map data and is either used as the input signal for cruise control or displayed to the driver. In LDW, a camera, a forward detection radar, and a lateral detection radar collect information ahead of and beside the vehicle, and data such as road width are integrated as input to the LDW system. In the parking assistance system, ultrasonic sensors or radars detect obstacles behind and beside the vehicle and display them to the driver. ACC, FCW, LDW, parking assistance systems and similar systems have all been studied in Japan's ASV (Advanced Safety Vehicle), the United States' IVI (Intelligent Vehicle Initiative), and the European e-Safety project.

2.2.3 Detection of traffic signs

Road traffic signs are important road traffic safety ancillary facilities that provide drivers with a variety of guidance and restraint information.

Obtaining traffic sign information correctly and in real time helps the driver drive more safely. In a safety assisted driving system, traffic signs are detected by an image recognition system. DaimlerChrysler is developing a new generation of image recognition system that first determines the shape of the sign and then reads the text and graphics inside the shape to make the final judgment. When a sign is difficult to judge, previously recorded road sign data from an electronic map can also be used for identification. BMW has also used image recognition technology for traffic sign research in its ADAS (Advanced Driver Assistance Systems) project, and Toyota in Japan has actively developed an automatic traffic sign identification system. Researchers outside China have explored many aspects of traffic sign image recognition algorithms. Traffic sign image recognition includes several steps, such as traffic sign localization (that is, determining the region of interest) and classifier design. Because the colors of the sign and its background and the shape of the sign are clearly defined in traffic engineering standards, localization can be based on color and shape. Because there are many kinds of traffic signs and many environmental factors affect the sign image, most traffic sign pattern classifiers are designed as nonlinear classifiers. Another approach extracts the skeleton of the traffic sign and identifies the sign with a matching algorithm.
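The color-based localization step can be illustrated with a minimal sketch that finds the bounding box of strongly red pixels in an image. The image is represented as a plain list of rows of `(r, g, b)` tuples so that the example has no external dependencies. The threshold values and the function names `is_sign_red` and `locate_red_region` are assumptions made for illustration. A practical system would use the colors defined in the relevant traffic engineering standard, compensate for lighting, and follow this step with a shape check and a classifier.

```python
def is_sign_red(pixel, min_red=150, max_other=90):
    """Crude color test with hypothetical thresholds, not standard-defined values."""
    r, g, b = pixel
    return r >= min_red and g <= max_other and b <= max_other


def locate_red_region(image):
    """Return (top, left, bottom, right) of the red pixels, or None if there are none.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    The returned box is the candidate region of interest for later classification.
    """
    rows = [y for y, row in enumerate(image) if any(is_sign_red(p) for p in row)]
    cols = [x for row in image for x, p in enumerate(row) if is_sign_red(p)]
    if not rows:
        return None
    return (min(rows), min(cols), max(rows), max(cols))


# Tiny synthetic image: gray background with a small red patch.
GRAY, RED = (120, 120, 120), (200, 30, 40)
image = [[GRAY] * 5 for _ in range(4)]
image[1][2] = RED
image[2][2] = RED
image[2][3] = RED

print(locate_red_region(image))  # prints "(1, 2, 2, 3)"
```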

2.2.4 Pedestrian detection

In a vehicle assisted driving system, computer vision based pedestrian detection means acquiring video of the scene in front of the vehicle with a camera mounted on the moving vehicle and then detecting the positions of pedestrians in the video sequence. Such a system generally includes two modules: region of interest (ROI) segmentation and target recognition. The purpose of ROI segmentation is to quickly determine the areas where pedestrians may appear and so narrow the search space. The common approach at present is a distance-based method using a stereo camera or radar, which has the advantage of speed. The purpose of target recognition is to accurately detect the positions of pedestrians within the ROIs. The method commonly used at present is shape recognition based on statistical classification, which has the advantage of robustness. Because of its great potential for pedestrian safety, the EU funded the PROTECTOR and SAVE-U projects continuously from 2000 to 2005 and developed two computer vision based pedestrian detection systems. The ARGO intelligent vehicle developed by the University of Parma, Italy, also includes a pedestrian detection module. Israel's MobilEye has developed a chip-level pedestrian detection system, and Honda Motor Co. of Japan has developed a pedestrian detection system based on infrared cameras. Xi'an Jiaotong University, Tsinghua University, and Jilin University have also done a great deal of research in this field.

3 Machine vision assisted driving technology for vehicle internal information

Machine vision assisted driving technology for vehicle internal information uses an on-board video camera to determine the driver's state, position, and the like, and then applies the necessary safety measures. It includes driver line of sight adjustment and driving fatigue detection.

3.1 Line of sight adjustment

Line of sight adjustment places every driver's eyes at the same relative height, ensuring an unobstructed view of the road surface and the surrounding lanes and the best possible visibility for safe driving. The technology includes:

(1) The eye position sensor can measure the position of the driver's eyes, and then determine and adjust the position of the seat accordingly;

(2) The motor automatically raises and lowers the seat to the optimum height, providing the driver with the best line of sight to grasp the road surface;

(3) The motor automatically adjusts the steering wheel, pedals, center console and even the floor height to make the driving position as comfortable as possible.

Line of sight adjustment systems have already been applied in some high-end cars. For example, the Volvo line of sight adjustment system uses a video camera located at the windshield to scan the driver's seat area and find a pattern representing the driver's face. The face is then scanned to determine the position of the eyes, and finally the center of each eye is found. These three steps take less than 1 second in total.
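Steps (1) and (2) above amount to turning a measured eye position into a seat height command. The following is a minimal sketch of that calculation, assuming the eye height rises one-to-one with the seat height and that the seat has a limited travel range. The function name `seat_height_command` and all numeric values are hypothetical and are not taken from the Volvo system.

```python
def seat_height_command(measured_eye_height_mm: float,
                        target_eye_height_mm: float,
                        current_seat_height_mm: float,
                        seat_min_mm: float = 0.0,    # assumed lowest seat position
                        seat_max_mm: float = 80.0):  # assumed highest seat position
    """Return the seat height that brings the eyes as close as possible to the target.

    Assumes raising the seat by x mm raises the eyes by x mm.
    """
    correction_mm = target_eye_height_mm - measured_eye_height_mm
    desired_mm = current_seat_height_mm + correction_mm
    # The motor cannot move the seat beyond its mechanical travel.
    return max(seat_min_mm, min(seat_max_mm, desired_mm))


# Eyes measured 30 mm below the target with the seat currently at 20 mm.
print(seat_height_command(1150.0, 1180.0, 20.0))  # prints "50.0"
```

When the required correction exceeds the seat travel, the command is clamped to the nearest limit, which is where adjusting the steering wheel, pedals or floor height as in step (3) could take over.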

3.2 Fatigue and distraction detection

Because fatigued driving is a main cause of major traffic accidents, many research institutions have studied this problem. Compared with alert driving, the characteristic indicators of fatigue include changes in the fine adjustment of the steering wheel, forward tilting of the head, drooping eyelids, and even eye closure. Current research on driving fatigue monitoring systems uses an on-board machine vision system to monitor body posture and operating behavior in order to determine the fatigue state. The AWAKE driving diagnostic system was developed in the European e-Safety project. It uses visual sensors and steering wheel force sensors to acquire driver information in real time and uses artificial intelligence algorithms to determine the driver's state (awake, possibly dozing, dozing). When the driver is fatigued, the system stimulates the driver with sound, light, vibration and similar means to restore alertness. Reference [34] uses a purpose-built camera, an electroencephalograph, and other instruments to accurately measure head movement, pupil diameter change, and blink frequency in order to study driving fatigue. The research indicates:

Under normal circumstances, an eye closure lasts between 0.12 and 0.13 s. If an eye closure reaches 0.15 s while driving, a traffic accident is likely to occur.
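The 0.15 s figure can be turned into a simple check on camera data. The sketch below assumes that an upstream vision module (not shown) already labels each video frame as eyes closed or eyes open, and that the frame rate is known. The function names and the per-frame representation are assumptions made for illustration and do not describe the AWAKE system or the method of [34].

```python
def longest_eye_closure_s(eye_closed_flags, frame_rate_hz):
    """Duration in seconds of the longest run of consecutive closed-eye frames."""
    longest = current = 0
    for closed in eye_closed_flags:
        current = current + 1 if closed else 0
        longest = max(longest, current)
    return longest / frame_rate_hz


def is_risky_closure(eye_closed_flags, frame_rate_hz, threshold_s=0.15):
    """True when the longest closure reaches the 0.15 s threshold quoted in the text."""
    return longest_eye_closure_s(eye_closed_flags, frame_rate_hz) >= threshold_s


# At 100 frames per second, 12 closed frames correspond to a normal 0.12 s blink,
# while 20 closed frames correspond to a 0.20 s closure.
normal_blink = [False] * 10 + [True] * 12 + [False] * 10
long_closure = [False] * 10 + [True] * 20 + [False] * 10

print(is_risky_closure(normal_blink, 100))
print(is_risky_closure(long_closure, 100))
```

Because the normal and risky durations differ by only a few hundredths of a second, the camera frame rate limits how reliably they can be told apart; at 25 frames per second, for example, one frame already spans 0.04 s.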

4 Conclusion

More than 80% of the information a driver receives is obtained visually. To compensate for the limits of the driver's vision, the development of vehicle safety assisted driving systems based on on-board machine vision has long been a research focus in intelligent transportation. This paper summarizes the state of the technology in this field, and its conclusions are as follows:

1) The driving process is analyzed and its three stages are described;

2) According to the scope of information acquisition, vehicle safety assisted driving is divided into machine vision technology for external information and machine vision technology for internal information. Machine vision technology for external information is divided into visual enhancement, visual field expansion, and road environment understanding. Machine vision technology for internal information is divided into line of sight adjustment and driving fatigue monitoring. The research status of machine vision technology in vehicle safety assisted driving systems is summarized;

3) The current shortcomings of research on machine vision technology in vehicle safety assisted driving systems are analyzed. Technologies such as driver vision enhancement under low visibility, information fusion for road environment understanding, and driving fatigue detection need further research.
