The C language compiling and linking process needs to convert a c program (source code) we write into a program (executable code) that can be run on hardware, and needs to be compiled and linked. Compiling is the process of translating textual source code into object files in the form of machine language. Linking is the process of organizing the target file, the operating system startup code, and the used library file to generate the final executable code.

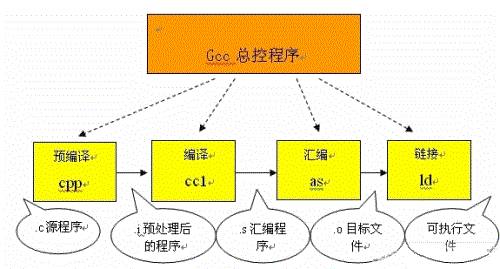

As can be seen from the figure, the compilation process of the entire code is divided into two processes of compiling and linking, compiling parts enclosed in braces in the corresponding figure, and the rest is the linking process.

The process is illustrated as follows:

The compilation process can be divided into two phases: compilation and assembly.

Compiled

Compiling is reading the source program (character stream), analyzing the lexical and syntax, and converting the high-level language instructions into functionally equivalent assembly code. The compilation process of the source file includes two main stages:

The first phase is the preprocessing phase, which takes place before the formal compilation phase. The preprocessing stage modifies the contents of the source file based on the preprocessing instructions that have been placed in the file. The #include directive is a preprocessor directive that adds the contents of the header file to the .cpp file. This way of modifying source files before compilation provides great flexibility to accommodate different computer and operating system environments. The code needed for one environment may be different from the code needed for another environment because the available hardware or operating system is different. In many cases, code for different environments can be placed in the same file, and the code can be modified during the preprocessing phase to adapt it to the current environment.

It mainly deals with the following aspects:

(1) macro definition instructions such as #define a b

For this pseudo-instruction, what precompilation needs to do is replace all a in the program with b, but a as a string constant is not replaced. There is #undef, which will cancel the definition of a macro so that the occurrence of the string is no longer replaced.

(2) Conditional compilation directives such as #ifdef, #ifndef, #else, #elif, #endif, etc.

The introduction of these directives allows programmers to decide which code to process by the compiler by defining different macros. The pre-compiler will filter out unnecessary code based on the relevant files.

(3) The header file contains instructions such as #include "FileName" or #include

In the header file, the #define directive generally defines a large number of macros (most commonly character constants), as well as declarations of various external symbols. The purpose of using header files is mainly to make certain definitions available to a number of different C source programs. Because in the C source program that needs to use these definitions, just add a #include statement and you don't have to repeat these definitions in this file. The pre-compiler will add all the definitions in the header file to the output file it generates for processing by the compiler. The header files included in the c source can be provided by the system. These headers are generally placed in the /usr/include directory. #include them in the program using angle brackets (< >). In addition, developers can also define their own header files. These files are generally placed in the same directory as the c source. At this time, double quotation marks ("") must be used in #include.

(4) Special symbols, pre-compiled programs can recognize some special symbols.

For example, the LINE flag that appears in the source program is interpreted as the current line number (decimal number), and FILE is interpreted as the name of the currently compiled C source program. The pre-compiler program replaces these strings that appear in the source program with appropriate values.

What a pre-compiler program does is essentially "replacement" work on the source program. After this substitution, an output file without macro definitions, no conditional compilation instructions, and no special symbols is generated. The meaning of this file is the same as the source file without preprocessing, but the content is different. Next, this output file will be translated into machine instructions as the output of the compiler.

In the second stage of compilation and optimization, only the constants in the pre-compiled output file, such as the definitions of numbers, strings, and variables, and keywords in the C language, such as main, if, else, for, while, { ,}, +,-,*,\etc.

The work to be done by the compiler is through lexical analysis and grammar analysis. After confirming that all instructions conform to the grammar rules, they are translated into equivalent intermediate code representations or assembly code.

Optimized processing is a more difficult technique in the compilation system. The problems it involves are not only related to the compiler technology itself, but also have a great relationship with the hardware environment of the machine. The optimization part is the optimization of the intermediate code. This optimization does not depend on the specific computer. Another optimization is mainly focused on the generation of object code.

For the former optimization, the main work is to remove common expressions, loop optimization (code extraction, strength reduction, transformation loop control conditions, known amount of merging, etc.), replication propagation, and deletion of useless assignments, and so on.

The latter type of optimization is closely related to the hardware structure of the machine. The most important is to consider how to make full use of the values ​​of the variables stored in the various hardware registers of the machine to reduce the number of visits to the memory. In addition, how to adjust the instructions according to the characteristics of the machine hardware execution instructions (such as pipeline, RISC, CISC, VLIW, etc.) makes the target code shorter and the execution efficiency higher, which is also an important research topic.

compilation

Assembly actually refers to the process of translating assembly language code into target machine instructions. For each C language source program processed by the translation system, this process will eventually be followed to obtain the corresponding target file. What is stored in the object file is also the machine language code of the target equivalent to the source program. The target file consists of segments. Usually there are at least two segments in a target file:

Code segment: This section contains mainly program instructions.

This section is generally readable and executable, but it is generally not writeable.

Data segment: Mainly store various global variables or static data to be used in the program. General data segments are readable, writable, and executable.

There are three main types of target files in the UNIX environment:

(1) Relocatable files

It contains code and data suitable for creating links to other object files to create an executable or shared object file.

(2) Shared object files

This file contains code and data suitable for linking in both contexts. The first is that the linker can process it together with other relocatable files and shared object files to create another object file; the second is the dynamic linker to share it with another executable file and other shared object files. Combine together to create a process image.

(3) Executable files

It contains a file that can be created by the operating system to execute. The assembler actually generates the first type of object file. For the latter two, some additional processing is required. This is the work of the linker.

Linking process

Target files generated by the assembler cannot be executed immediately, and there may be many unresolved issues.

For example, a function in a source file may reference a symbol (such as a variable or a function call, etc.) defined in another source file; a function in a library file may be called in the program, and so on. All these problems need to be solved by the linking program.

The main task of the linker program is to connect the relevant object files to each other, that is, to link the symbols referenced in one file with the definition of the symbol in another file, so that all of these object files become a capable operating system. Into the implementation of the unified whole.

Depending on how the developer specifies the link to the same library function, the link handling can be divided into two types:

(1) Static link

In this way of linking, the function's code will be copied from its location in the statically linked library to the final executable program. This way the code will be loaded into the process's virtual address space when the program is executed. A statically linked library is actually a collection of object files, each of which contains code for one or a group of related functions in the library.

(2) Dynamic Links

In this way, the code of the function is placed in a target file called a dynamic link library or shared object. What the linker does now is to record the name of the shared object and other minor registration information in the final executable program. When this executable file is executed, the entire contents of the dynamic link library will be mapped to the virtual address space of the corresponding process at runtime. The dynamic linker will find the corresponding function code based on the information recorded in the executable program.

For function calls in the executable file, dynamic linking or static linking methods can be used respectively. Using dynamic linking can make the final executable smaller and save some memory when the shared object is used by multiple processes because only one copy of the shared object's code needs to be saved in memory. But not using dynamic links is better than using static links. In some cases, dynamic linking may cause some performance damage.

The gcc compiler we use in Linux is to bundle the above processes, so that the user can use only one command to complete the compilation. This really facilitates the compilation, but it is very disadvantageous for beginners to understand the compilation process. The following figure shows the compilation process of the gcc agent:

From the above figure you can see:

Precompiled

Convert .c files to .i files

The gcc command used is: gcc -E

Corresponds to the preprocessing command cpp

Compiled

Convert .c/.h files to .s files

The gcc command used is: gcc -S

Corresponds to the compile command cc -S

compilation

Convert a .s file to a .o file

The gcc command used is: gcc -c

Corresponds to the assembly command is as

link

Convert .o files to executable programs

The gcc command used is: gcc

Corresponding to the link command is ld

To sum up the compilation process on the above four processes: pre-compile, compile, compile, link. Understanding the work done in these four processes is helpful for us to understand the working process of the header files, libraries, etc., and a clear understanding of the compile and link process also helps us to locate errors during programming and to try to mobilize the compiler when programming. The detection of errors will be of great help.

Litz Wire Typical applications are: high frequency inductor, transformer, frequency converter, fuel cell, the horse, communication and IT equipment, ultrasound equipment, sonar equipment, televisions, radios, induction heating, etc.In 1911, New England became the first commercial manufacturer in the United States to produce the Leeds line.Since then, New England has remained the world leader in providing high-performance Leeds line products and solutions to customers around the world.It is also transliterated as the "litz line".

Litz Wire,Copper Litz Wire,Copper Transformer Litz Wire,High Temperature Litz Wire

YANGZHOU POSITIONING TECH CO., LTD. , https://www.cndingweitech.com